Nowadays it’s nearly impossible to keep up with all the hipster technologies and fads. One minute you’re using the latest "cool" tech and before you know it, it’s been superseded by something much better that everyone will tell you to use right away. Once in a while every developer wakes up in a cold sweat, worried that the JavaScript framework they were learning yesterday is no longer cool. Right? Just me? Hmm okay then…

With the ever-growing shift towards being cloud-only, we’re seeing a new category of technologies that it seems we need to be aware of – I’m referring to "Containers" and container-orchestration software. The goal of these tools is to make developers’ lives easier by abstracting away the need to manage servers or configuration, letting them focus on their code instead.

What are containers?

Containerisation makes it possible to “package up” all of the dependencies needed to run your code: from reduced versions of the operating system and web server software, to system configuration, to the application and all its DLLs. The output is a collection of files referred to as an “image”. These images can then be deployed to servers, where the relevant containerisation software will provision standalone instances (“containers”) of the applications they contain, each running in isolation.

Containers help ensure the setup of each environment is exactly the same. Imagine discovering all too late that your code doesn’t work on production, despite it working on all of your test environments, because of a simple difference in server configuration… This is where containerisation becomes particularly valuable!

Developers can write their code, then install and configure everything needed for it to run inside the container. Because the image running on the developer’s local machine is the same image that gets deployed to further environments, anything missing is identified early on: the application simply won’t run.

Gone are the days of “works on my machine” – code can run everywhere!

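Well-known technologies you might have heard of include Docker and Vagrant, although Vagrant is primarily aimed at reproducible virtual machine environments rather than containers.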

What is orchestration?

Container-orchestration software, such as Kubernetes, gives granular control over deployments of your containers, including scaling and distribution across multiple servers / clusters in different locations.

It actively monitors the health of each container and will automatically attempt to self-heal by re-deploying when issues arise. You are also given granular control over the internal networking (via private DNS) between different deployments, auto-scaling rules, and the clusters / servers which different containers should be running on.
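To make the health monitoring a little more concrete, here is a rough sketch of how a health check might be declared in Kubernetes (covered later in this article). The container name, image tag, URL path and timings are all assumptions for illustration, not values from a real project.

```yaml
# Fragment of a container definition; names, path and timings are illustrative assumptions.
containers:
  - name: my-umbraco-site
    image: my-umbraco-site:1.2.3
    livenessProbe:
      httpGet:
        path: /                 # hypothetical URL that returns a success status when healthy
        port: 80
      initialDelaySeconds: 60   # give the site time to start before the first check
      periodSeconds: 10         # then check every 10 seconds
      failureThreshold: 3       # restart the container after 3 consecutive failures
```

When the probe keeps failing, Kubernetes restarts the container, which is the "self-heal" behaviour described above.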

When running in a cluster, containers are automatically placed on (and, where needed, rescheduled between) machines to appropriately handle load. Rather than overloading a single server, the orchestration software will spread the running processes across multiple servers in the cluster. This is particularly valuable when running tasks on a schedule, for example, as these can be run on whichever servers in the cluster are currently experiencing the lowest load.

Most orchestration software can be installed directly onto a server, though managed clusters are also available on Azure via AKS and on Amazon Web Services via EKS. Another option is Microsoft’s own Azure Service Fabric, which is available as a managed service on Azure or can be self-hosted. Orchestration software is typically managed via a command line interface, though there are products out there that offer a GUI.

Containers in practice

There's certainly a lot of hype around containerisation, with many startups and big tech companies jumping on the Docker / Kubernetes bandwagon as their hosting method of choice. Besides the obvious deployment benefits, it saves significant amounts of time troubleshooting issues on individuals' machines as teams grow larger, because developers can have an identical environment up and running without much effort.

Part of the reason these two tools have become the popular choice is pretty clear: they're both incredibly performant and reliable, actively maintained open source pieces of software with thriving communities – just like Umbraco!

But do these technologies fit into the Umbraco world? What are the downsides? Should you consider them for your next project?

Containers: Docker

Despite all of this sounding pretty complex, Microsoft have made it relatively straightforward to get your ASP.NET MVC website (running Umbraco) working within Docker.

Running Umbraco in Docker

You'll need Docker installed on your machine in order to run or create an image for your application. The Docker for Windows installer is available to download for free from the Docker Store (~550MB).

Once the installer has completed, run the following in the command line to check it's installed correctly:

    > docker info

You should see some details about your setup, such as number of containers running.

Thankfully it's not entirely necessary to learn the extensive Docker CLI commands! Visual Studio 2017 makes it simple to convert your existing applications into Docker images directly from the IDE.

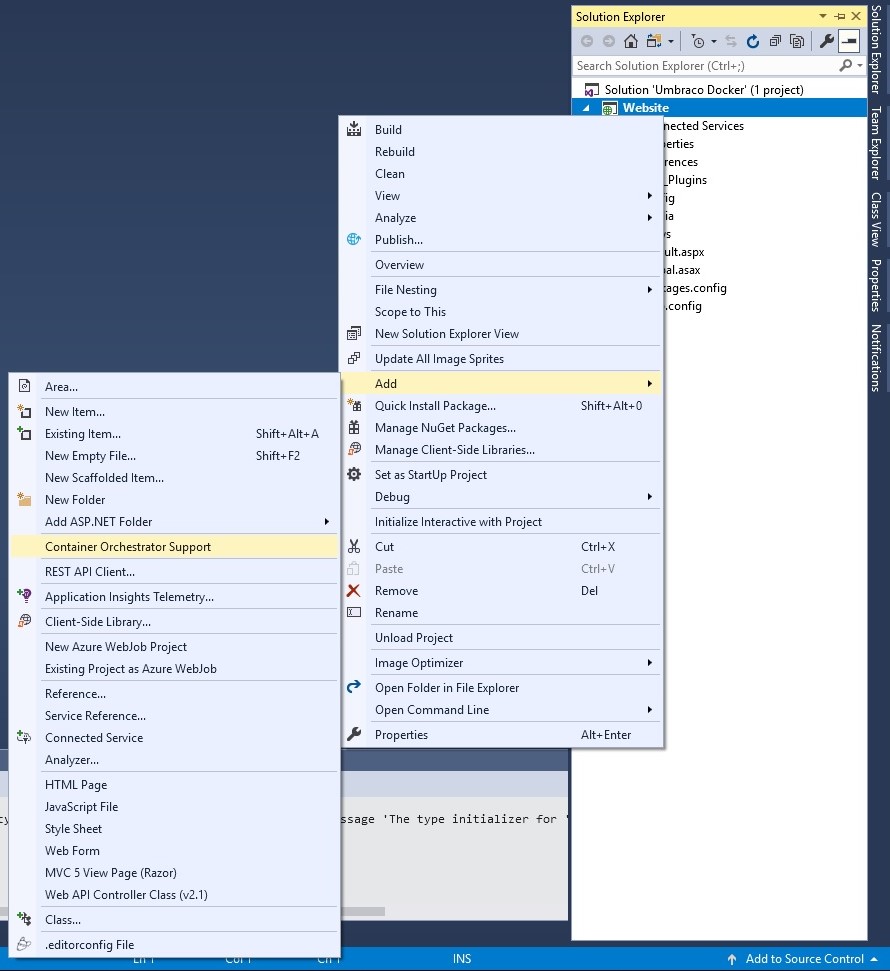

Within the Visual Studio solution for your Umbraco site, right click on your Web project (where you have installed Umbraco), navigate to Add in the menu, and select "Container Orchestrator Support".

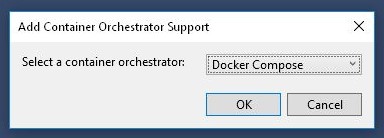

A dialog will appear with some options for container technologies. Choose "Dockerfile" and proceed. If prompted, switch the container mode to "Windows"; this tells your container software which operating system to target.

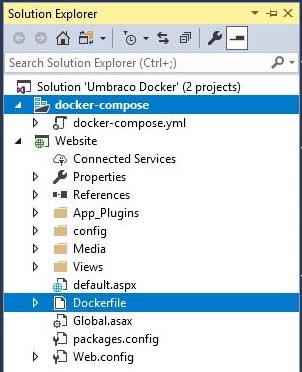

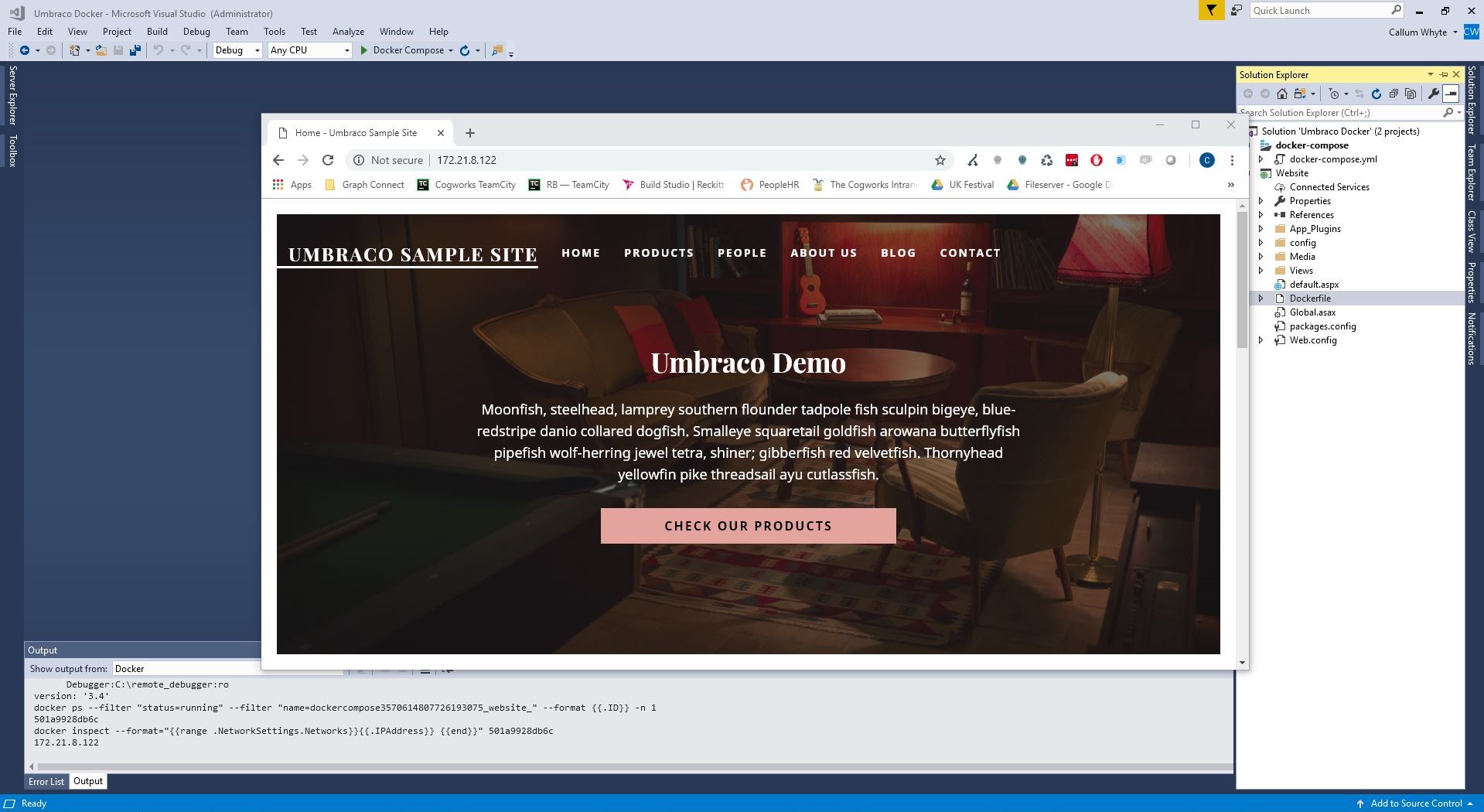

This will create a new project in your Visual Studio solution called "docker-compose" along with a file in your website root called "Dockerfile". The two files should look a bit like this:

The "docker-compose.yml" file tells Docker which services to create and points it to the location of the image that needs to run. It is based on YAML syntax.

    version: '3.4'

    services:
      website:
        image: my-umbraco-website
        build:
          context: .\Website
          dockerfile: Dockerfile
        ports:
          - "8080:80"
          - "127.0.0.1:8001:8001"
        environment:
          - ConnectionString=Server={HOSTNAME_HERE};Database={DB_HERE};User Id={USER_HERE};Password={PASSWORD_HERE}

The ports on which the service should run can be defined as a list. In a similar way, environment variables such as appsettings or connection strings can be defined here.

The "Dockerfile" contains all the instructions needed to create an image of your application, such as paths to copy files to, and the dependencies the image has.

    FROM microsoft/aspnet:4.7.2-windowsservercore-1803
    ARG source
    WORKDIR /inetpub/wwwroot
    COPY ${source:-obj/Docker/publish} .

The "FROM" declaration on the first line tells Docker which base images it should pull down, in this case "ASP.NET 4.7.2 for Windows Server Core" which will allow us to run .NET applications within IIS on Windows.

Build the solution in Visual Studio to generate your Docker image!

WARNING: the first build will take some time! Docker needs to download a stripped-down version of the required operating system (here Windows Server Core, which has no GUI) into the image in order for the application to run. For "Windows" Docker containers this is ~10GB in size, whereas "Linux" containers are significantly smaller at ~500MB. As Umbraco runs on .NET Framework, and therefore only on Windows, this large download is necessary. If in future Umbraco can run on .NET Core and Linux, this download could be much smaller.

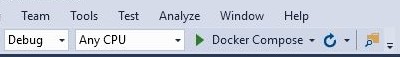

While it’s possible to run your Docker image directly from the CLI, it’s also possible from the Visual Studio 2017 UI. Previously, clicking "Run" (or Ctrl + F5) on your project would open your site in a new browser window. Now that the "docker-compose" project exists, the relevant Docker commands will run instead, causing Docker to start a container from the image of your application with an internal IP address assigned, and a browser will open with the site running.

Considerations

Hosting in a container is not like an ordinary server setup, which can make troubleshooting issues more complex. As there is no GUI to manage your environment (no OS desktop, no IIS Manager), you need to rely on the logs from your application and from your container tool itself to tell you what's going on.

Furthermore, as the container image is generated at build time, only files in the image persist across deploys; everything else written inside the container is overwritten or lost forever. By default, containers do not provide "persistent storage" for things such as Umbraco media, file system caches or SQL databases, all of which need it. While it is technically possible to host storage services or SQL Server instances within containers, it's advised to offload these onto externally hosted services such as Azure Blob Storage or Azure SQL. As these services live on the wider data-center network rather than locally, there may be added latency with this approach. Because of this, applications that rely heavily on the local disk for performance reasons may not be the right choice for containerisation.

Finally, networking within containers can become slightly more complicated, as each container is the equivalent of an isolated virtual server with its own networking rules. Because of this, none of the containers or other services running (e.g. SQL Server) can talk to each other over "localhost" – in this scenario "localhost" is the current container, not the host machine (the server running the container software itself). Each container and service has its own IP address and name on the host machine's internal network, resolvable through internal DNS, and these should be used for communication. Communicating with services hosted on the public internet can still be done using public DNS.
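As a rough illustration of the "localhost" point, here is a sketch of a docker-compose file with two services. The "db" service and its image name are hypothetical placeholders (and, as noted above, an externally hosted database is usually the better choice); the point is only that the website reaches the database by its service name.

```yaml
# Sketch only: the "db" service and its image name are hypothetical placeholders.
version: '3.4'

services:
  website:
    image: my-umbraco-website
    environment:
      # "db" is the name of the service below, resolved by Docker's internal DNS.
      # "localhost" here would point back at the website container itself.
      - ConnectionString=Server=db;Database={DB_HERE};User Id={USER_HERE};Password={PASSWORD_HERE}
    depends_on:
      - db

  db:
    image: my-sql-server-image   # placeholder, not a real image name
```

If the database lives outside Docker, the same connection string would simply use that service's own hostname instead of "db".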

Deployment: Kubernetes

While it is possible to run Docker directly on a server in isolation, it can become increasingly difficult to manage as your application needs to scale or you need to perform deployments with minimal downtime (Docker on Windows can be slow to start).

Kubernetes appears to be the flavour of choice when it comes to container-orchestration. There is a wide range of choices when it comes to hosting Kubernetes, from managing your own clusters to using a hosted option on Azure or AWS.

As with Docker, Kubernetes configuration is managed through a YAML-based text file, conventionally named something like "deployment.yaml". It allows you to define a spec for your deployment as well as metadata (name, version, labels) to easily identify it running in production.

Kubernetes deployments can be triggered via the "kubectl" command line interface, for example by running "kubectl apply -f deployment.yaml".

Example Kubernetes deployment configuration:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-umbraco-site
    spec:
      selector:
        matchLabels:
          app: umbraco
      template:
        metadata:
          labels:
            app: umbraco
        spec:
          containers:
            - name: my-umbraco-site
              image: my-umbraco-site:1.2.3
              ports:
                - containerPort: 80
                - containerPort: 8001

In this example configuration:

- "name" property is the name of our deployment

- "image" is the Docker image we need deployed (created in the previous section)

- a public-facing "port" is not part of a Deployment (a container's "ports" list only accepts "containerPort" entries); the port we want our site to respond on publicly is defined on a separate Kubernetes Service

- "containerPort" is the port our container is running on internally
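The split between a public port and an internal container port is normally expressed with a separate Service object that sits in front of the Deployment. A minimal sketch, assuming the "app: umbraco" label from the example above and a hosting platform that supports the "LoadBalancer" service type; the name and port numbers are illustrative:

```yaml
# Hypothetical Service for the Deployment above; name and ports are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: my-umbraco-site
spec:
  type: LoadBalancer      # asks the hosting platform for an externally reachable address
  selector:
    app: umbraco          # must match the labels on the Deployment's pod template
  ports:
    - port: 80            # port the Service responds on
      targetPort: 80      # containerPort on the pods that receives the traffic
```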

With some additional settings it is possible to configure replicas, auto-scaling, load balancing and deployment policies for minimal downtime during deployments.
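As a rough idea of what those additional settings look like, the fragment below could be merged into the "spec" of the Deployment shown earlier. The replica count and rollout numbers are assumptions picked for illustration, not recommendations.

```yaml
# Fragment of a Deployment "spec"; the numbers are illustrative assumptions.
spec:
  replicas: 3               # run three copies of the container
  strategy:
    type: RollingUpdate     # replace containers gradually instead of all at once
    rollingUpdate:
      maxUnavailable: 0     # never drop below the desired replica count
      maxSurge: 1           # allow one extra copy while the new version starts
```

With these values, a new version is started alongside the old ones and traffic only loses an old copy once its replacement is up, which is what keeps downtime minimal during a deployment.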

Considerations

As with Docker, significant effort is required to configure clusters and the relevant networking, which is probably best left to a dedicated dev-ops person. Setup of advanced but desirable features such as auto-scaling and load balancing is quite involved, requiring some thought about

Many of the benefits gained from container-orchestration, such as auto-scaling, self-healing and minimal-downtime deployments (via slots), can be gained by using PaaS services like Azure Web Apps or AWS Elastic Beanstalk. These involve less configuration, making the barrier to entry and potential learning curve far lower.

Should I use containers in my project?

The honest answer is a very broad "it depends".

As with all new technologies there is a learning curve, though for containerisation this is perhaps steeper than most. It requires a change of mindset in how your applications are constructed in the first place, and debugging becomes more difficult without a UI (though this is also the case when using PaaS hosting). Given this tech will likely be powering your business's or client's critical assets (e.g. website), you need to be 100% confident that you can maintain and support the setup.

If you have a large and growing team working on projects with lots of dependencies, then containers should help alleviate troubleshooting issues with individuals' setups. If you can justify absorbing the cost of the learning curve, then perhaps containers are a good choice!

Careful consideration should be given to networking; however, this should probably be left to an experienced dev-ops person with knowledge of configuring and maintaining the clusters, network rules, and deployments in order for the tools to run smoothly. Again, there is potentially an up-front training cost here too.

You could avoid these complexities by opting to use container-orchestration at its most basic level – for hosting single containers – but you won't reap the full potential of the system, such as auto-scaling or seamless deployments at scale. A happy middle ground for many organisations that can't afford to invest in learning, configuring and managing an orchestration tool could be a PaaS platform, thanks to its simple configuration yet powerful feature set.

Finally, just because the big guns and hipster startups are using these tools doesn't mean you need to. There are places where they fit, and others where they don't. Configuring and managing these tools can be complicated, and it may be better to stick with what you're already comfortable with if it's working. If you're looking to make a change, take small steps first, such as investigating PaaS, before making a giant leap into unknown, scary territory.

References

- Modernize existing .NET applications with Azure cloud and Windows Containers (2nd edition) – https://docs.microsoft.com/en-us/dotnet/standard/modernize-with-azure-and-containers/